Why AI Vision Models Struggle with Technical Analysis

Deep Charts Newsletter #1

I recently posted a video exploring whether LLM vision models can interpret stock market technical indicator charts, using Meta’s Llama 3.2 Vision model as a case study. The idea itself is fascinating: could an advanced vision-language model analyze stock charts in a manner comparable to a human trader? Unfortunately, in practice Llama 3.2 Vision was less than impressive. The insight it provided was mostly surface-level and, at times, entirely incorrect, which is consistent with findings from recent research I’ve come across.

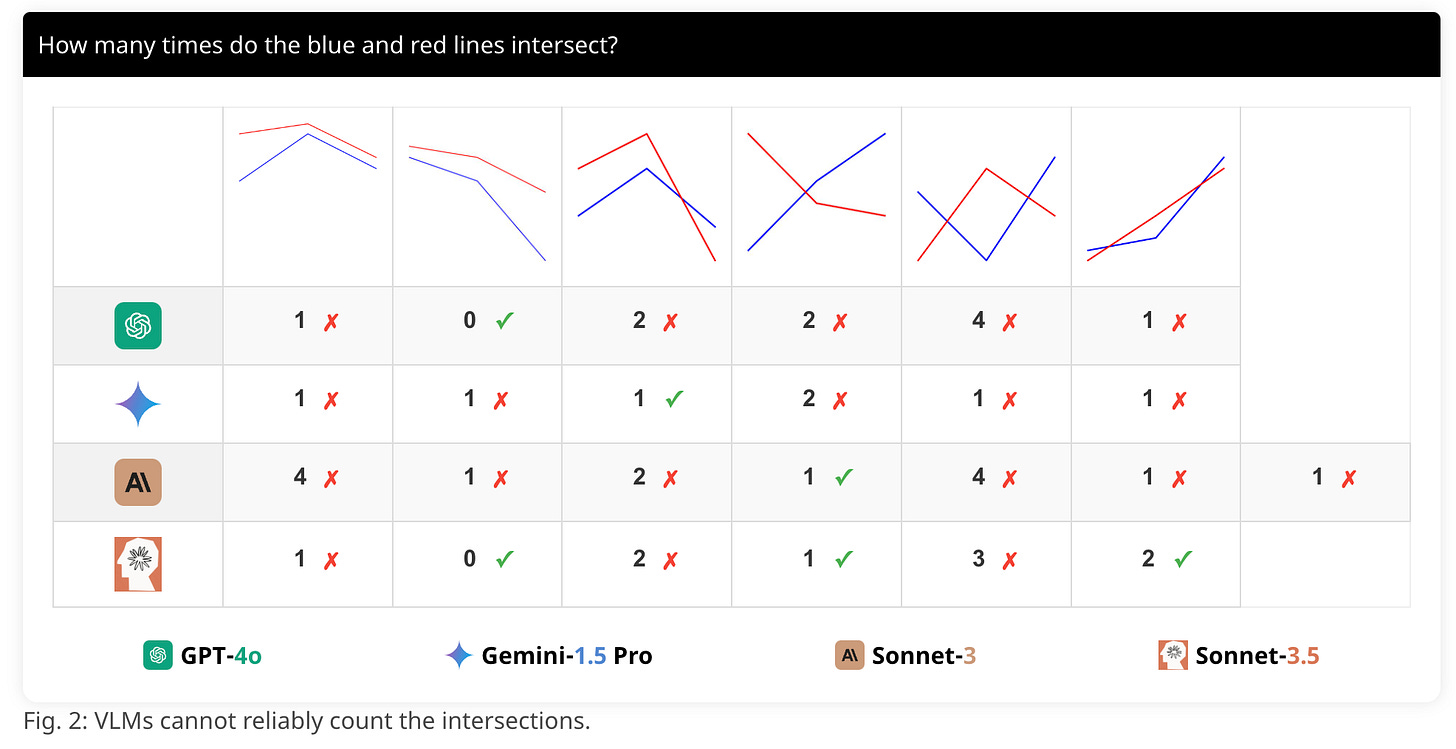

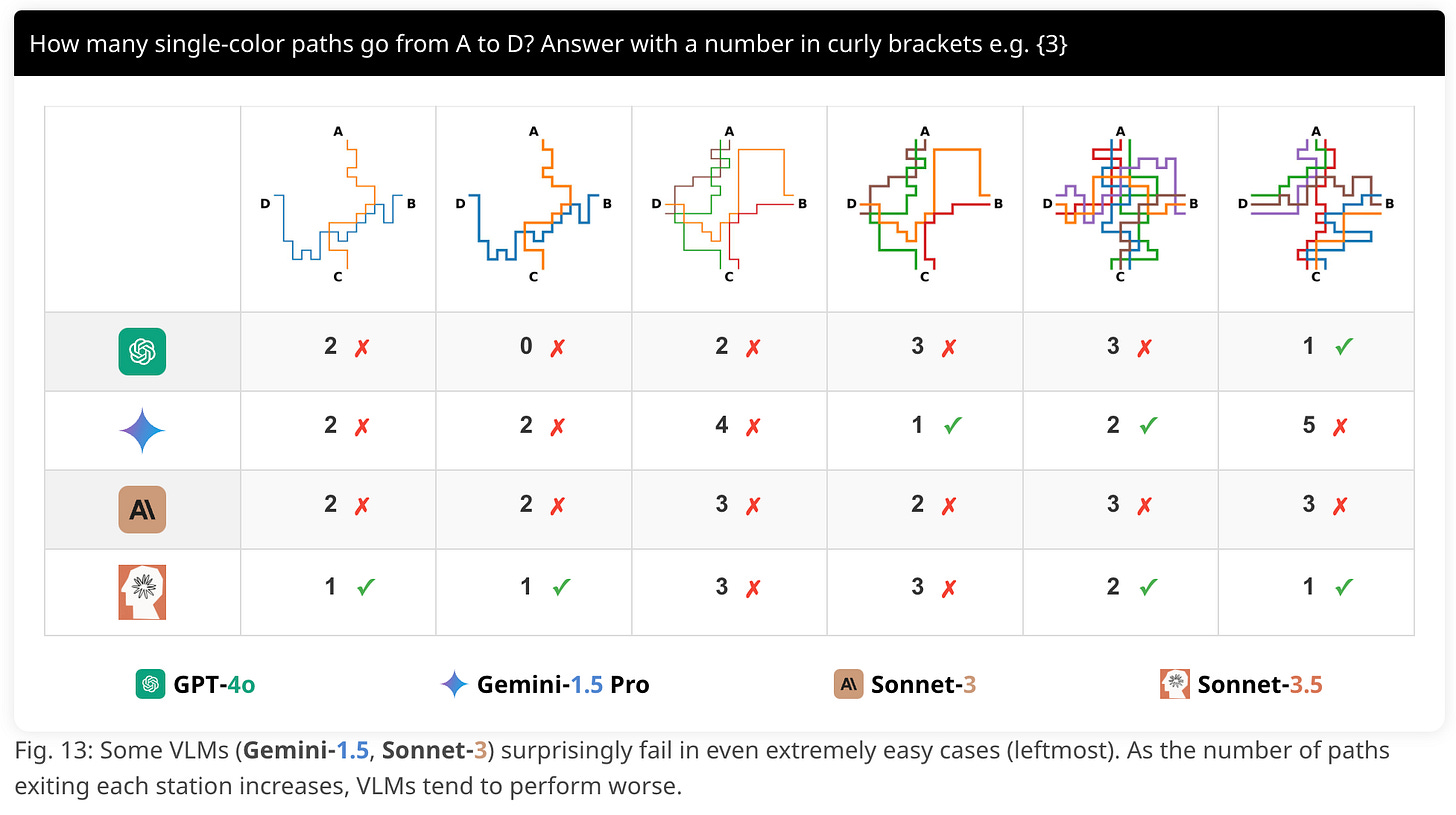

A recent study, "Vision-Language Models are Blind," highlights a fundamental issue. Large vision-language models (VLMs) excel at understanding text in natural scenes or providing basic object recognition. But when faced with more structured visual data, like charts, graphs, or tables, their performance takes a nosedive. Why? These models aren’t inherently built to reason through the spatial relationships or precise semantics embedded in visual abstractions like a candlestick chart or an MACD indicator.

Multimodal models like Llama 3.2 Vision try to bridge the gap between text and images, but they lack domain-specific reasoning capabilities. Recognizing a dog in a photo isn’t the same as parsing a double-bottom pattern or identifying stochastic divergence. These tasks demand fine-grained visual understanding, numerical reasoning, and often contextual knowledge about how financial markets work.
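To make the numerical side of this concrete, here is a minimal sketch of what an MACD panel actually encodes. It assumes the conventional 12/26/9 spans and an EMA seeded with the first closing price (charting libraries differ on seeding); the function names are illustrative and do not come from any particular library. A model reading the chart has to recover these crossover points from pixels alone.

```python
from typing import List, Tuple


def ema(values: List[float], span: int) -> List[float]:
    """Exponential moving average, smoothing factor 2 / (span + 1).

    Assumption: the series is seeded with its first value.
    """
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def macd(
    closes: List[float], fast: int = 12, slow: int = 26, signal: int = 9
) -> Tuple[List[float], List[float], List[float]]:
    """Return (macd_line, signal_line, histogram) for a list of closes."""
    fast_ema = ema(closes, fast)
    slow_ema = ema(closes, slow)
    macd_line = [f - s for f, s in zip(fast_ema, slow_ema)]
    signal_line = ema(macd_line, signal)
    histogram = [m - s for m, s in zip(macd_line, signal_line)]
    return macd_line, signal_line, histogram


def bullish_crossovers(macd_line: List[float], signal_line: List[float]) -> List[int]:
    """Indices where the MACD line moves from at-or-below the signal line to above it."""
    return [
        i
        for i in range(1, len(macd_line))
        if macd_line[i - 1] <= signal_line[i - 1] and macd_line[i] > signal_line[i]
    ]


if __name__ == "__main__":
    # Synthetic prices (not market data): a steady decline followed by a rally.
    closes = [100.0 - i for i in range(40)] + [60.0 + 2 * i for i in range(1, 41)]
    macd_line, signal_line, _ = macd(closes)
    print("bullish crossover indices:", bullish_crossovers(macd_line, signal_line))
```

On a chart, each crossover is just two lines touching, often a pixel or two apart, which is exactly the kind of fine-grained spatial judgment the models tend to get wrong.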

Interestingly, Google’s Gemini 2.0, which I haven’t tested yet for this task, is said to address some of these weaknesses. But let’s not leap to conclusions. The fundamental limitation may still be that these models are generalists, optimized for breadth rather than depth. They can "see" and "read," but they’re far from actually understanding specialized visual data.

These AI tools aren’t yet ready to function without human oversight, especially for technical analysis, which also relies on a high degree of belief in the method itself. Trusting their output, even if it is right some of the time, carries significant risk. And while future vision models may eventually make this kind of automated technical analysis practical, markets will likely adapt quickly, leaving only a short window to take advantage of this technology.

The challenge for LLMs is the spatial dynamic, but flip that ambition and apply it to a cross-sectional view of tabulated descriptors, integer and non-integer alike. There is the quest.
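One way to read that suggestion is to hand the model the numbers as text rather than as pixels. Below is a minimal sketch of that idea, assuming the indicator values are already computed; `rows_to_text_table` is a hypothetical helper and the sample row holds made-up values, not market data.

```python
from typing import Dict, List, Union

Cell = Union[int, float, str]


def rows_to_text_table(
    rows: List[Dict[str, Cell]], columns: List[str], float_digits: int = 2
) -> str:
    """Render rows as a pipe-delimited text table suitable for a text prompt.

    Floats are rounded to `float_digits`; integers and strings pass through.
    """

    def fmt(value: Cell) -> str:
        if isinstance(value, float):
            return f"{value:.{float_digits}f}"
        return str(value)

    lines = [" | ".join(columns)]
    for row in rows:
        lines.append(" | ".join(fmt(row[c]) for c in columns))
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample values for illustration only.
    sample = [{"date": "2024-01-02", "close": 101.5, "volume": 1200}]
    print(rows_to_text_table(sample, ["date", "close", "volume"]))
```

Whether a text-only model reasons better over such a table than a vision model does over the equivalent chart is an open question here, not something this sketch demonstrates.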